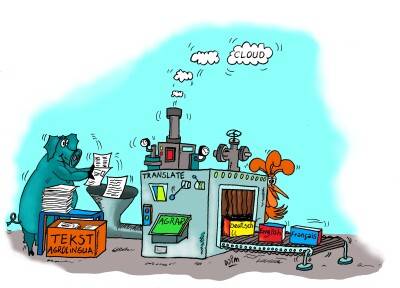

From primitive program to self-learning technology: how has machine translation changed over time?

Google Translate, DeepL, Reverso Context: chances are that you have used one or more of these translation tools. But when was the idea of machine translation originally conceived and how has it developed?

History

The origins of machine translation go back to the late 1940s. Warren Weaver, an American scientist and mathematician, is seen as one of the pioneers of machine translation. In 1949, he wrote a memorandum titled “Translation”, in which he argued that the essence of a language involves much more than a choice of words. For example, he stated that sentence structure plays a significant role in the translation of texts. According to Weaver, translation is a complex task that requires much more than simply translating a text word for word. His memorandum inspired researchers to start experimenting with machine translation, which led to the Georgetown-IBM experiment in 1954. The aim of this experiment was to demonstrate the capabilities of machine translation by having a computer translate Russian sentences into English.

The demonstration attracted a great deal of attention, but what it could actually do was very limited: the system worked with a small vocabulary and a set of carefully selected sentences. It was unable to grasp the nuances of language or the context in which texts were written, so it was nowhere near capable of producing good translations of real-world texts. The experiment highlighted the challenges facing machine translation and the complexity of language comprehension, particularly between languages with different grammatical structures, such as Russian and English.

Despite the disappointing results, this experiment formed the starting point for further research and development. It inspired researchers to continue working on higher quality machine translations, which ultimately led to the more advanced translation tools available to us today.

Rule-based machine translation (RBMT)

The first machine translations were anything but accurate. The systems of the time were based on simple grammar rules and dictionary entries, an approach known as rule-based machine translation (RBMT). The Georgetown-IBM experiment was one of the first attempts to develop an RBMT system. Because these systems translated texts very literally, more or less word for word, they produced extremely awkward and often incomprehensible translations.

Statistical machine translation models

Statistical machine translation models were developed in the 1990s and revolutionised machine translation. These models are based on probability: they analyse large amounts of bilingual text to identify patterns and correlations between words and sentence structures in the two languages, and then select the most probable translation. Although their output was by no means perfect, its quality was significantly higher than that of the very literal translations produced by RBMT systems.

Neural networks

Although statistical machine translation models improved translation quality, the real breakthrough came with the rise of neural networks. These networks are trained on large volumes of text to build translation models that capture the meaning of words and sentences, as well as the context in which they are used. This method is known as neural machine translation (NMT). Because these models are much better at handling context, they have largely replaced traditional statistical machine translation models. Google Translate and DeepL are two well-known examples of translation tools that use neural networks.

Deep learning

Deep learning is a type of machine learning that uses artificial neural networks consisting of multiple layers (hence the name “deep”). To learn to translate, such a model needs massive amounts of text in both languages, ideally sentences paired with their translations, so that it learns how sentences in one language correspond to sentences in the other. During training, the model is adjusted again and again to reduce its errors, so it effectively learns from its mistakes. As a rule, the more high-quality training data it is given, the more accurate its translations become.

Future perspective

Deep learning has played a crucial role in the continued improvement of machine translation. Machine translations are expected to become more accurate and to capture the nuances and context of language better. Although enormous progress has been made, they are still far from perfect. For example, a source text might say that you should not do something, whereas the translation states the opposite. This can have far-reaching consequences, particularly in legal documents. Cultural nuances, idiomatic expressions and sector-specific terminology are also often poorly translated.

A translation tool such as DeepL is highly likely to make mistakes when translating specialist agricultural terminology. For subject-specific translations, the best option is to call on human translators. The native-speaker translators at AgroLingua have an excellent, professional command of various languages. In addition to their language skills, our translators specialise in the agricultural sector. This enables us to translate your texts accurately and convey the right message.
